Transformer-Based Generative Models

A "Like I'm a 10-Year-Old" Explainer

Transformers: The Super Smart Text Machines 🤖

Imagine you're reading a really long story, and halfway through, someone asks you what the main character's pet was called from page 2. That's hard to remember, right? Computers had the same problem!

The Old Way (RNNs)

The old computer programs, called recurrent neural networks (RNNs), were like someone reading a book word by word, trying to remember everything in order. But by the time they got to page 100, they'd mostly forgotten what happened on page 1! It's like playing that memory game where someone says "I went to the shops and bought an apple," then the next person adds something, and by the 20th person, nobody remembers the apple!
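To see the "forgetting" idea in numbers, here is a tiny sketch. It is not a real RNN (real ones use learned weights and squashing functions); it only assumes a made-up rule where the memory keeps half of what it had and then adds the new word. The function name `read_in_order` and the `keep` value are invented for this illustration.

```python
def read_in_order(signals, keep=0.5):
    """Toy memory: at each step, keep a fraction of the old memory and add the new input."""
    memory = 0.0
    for signal in signals:
        memory = keep * memory + signal
    return memory

# Put a 1.0 on the very first word, then read more and more "empty" words (0.0).
# What comes back is how much of that first word is still in memory at the end.
for n in (1, 5, 20):
    story = [1.0] + [0.0] * (n - 1)
    print(n, "words read, first word left in memory:", read_in_order(story))
```

With this toy rule, only 0.0625 of the first word is left after 5 words, and after 20 words it is about two millionths. That is the apple nobody remembers.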

Enter the Transformer!

Transformers are like having a brilliant student who can look at the ENTIRE book at once and instantly spot which bits are important. They have a superpower called "attention" - it's like having a magical highlighter that automatically marks the important bits!

Here's how they work:

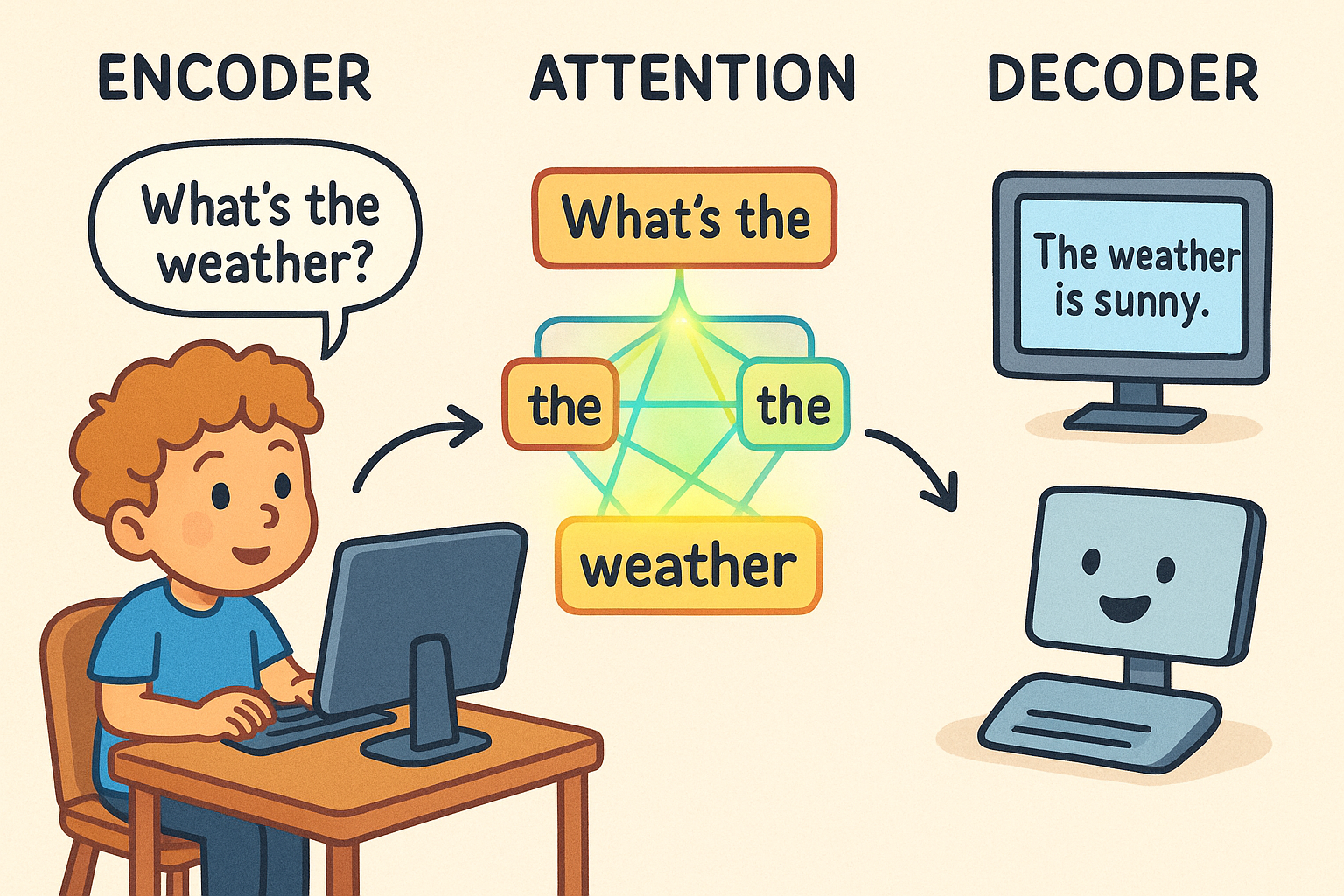

The Encoder (The Reader) 📖

When you type something like "What's the weather?", the encoder reads it and creates a special map of what all the words mean together. It's like turning your sentence into a treasure map where X marks the important spots.

The Attention Mechanism (The Magic Highlighter) ✨

This is the clever bit! Instead of reading left to right like we do, it looks at all the words at once and gives every pair of words a score for how strongly they belong together. That's how it can spot that "weather" is the most important word and that "what" at the beginning connects to the question mark at the end. It's like having X-ray vision for sentences!
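For the curious, the magic highlighter can be sketched in a few lines. This is a minimal version of "scaled dot-product attention" under some loud assumptions: the two-number "meanings" for each word are made up by hand (a real transformer learns them), and the same numbers are reused as queries, keys and values, where a real model would first pass them through learned transformations.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that add up to 1."""
    peak = max(scores)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Similarity score between two vectors."""
    return sum(x * y for x, y in zip(a, b))

def attention(queries, keys, values):
    """For each query, score every key, then blend the values by those scores."""
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for q in queries:
        weights = softmax([dot(q, k) / scale for k in keys])
        blended = [sum(w * v[i] for w, v in zip(weights, values))
                   for i in range(len(values[0]))]
        outputs.append(blended)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical hand-picked "meanings"; not taken from any trained model.
words = ["what's", "the", "weather"]
vectors = [[1.0, 0.0], [0.1, 0.1], [0.0, 2.0]]

outputs, weights = attention(vectors, vectors, vectors)
for word, row in zip(words, weights):
    print(word, "highlights:", [round(w, 2) for w in row])
```

Each printed row is one word's highlighter: three weights that add up to 1, showing how much that word "looks at" each word in the sentence. With these made-up numbers, the row for "weather" puts most of its weight on "weather" itself.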

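The Decoder (The Writer) ✍️

The decoder takes that treasure map and writes a response, one word at a time. Before each new word, it checks back with the encoder's map to make sure it's staying on topic. It's like having a friend who keeps reminding you what the question was whilst you're answering it. (Some transformers, including the GPT models behind ChatGPT, skip the separate reader and use only the decoder part to do both jobs.)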

Why They're Brilliant

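- They can still see the beginning of your question when they reach the end, as long as the whole thing fits in the amount of text they can look at in one go (their "context window")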

- They can understand when words at the end relate to words at the beginning (like knowing "it" means "the dog" from earlier)

- They can work on all the words at the same time instead of one after another, which makes them much faster to train. It's like having 100 students each reading a different page simultaneously!

Real-Life Magic

When you ask ChatGPT to write a story, or when Google Translate converts languages, or when your phone predicts your next word - that's very often transformers at work! They're so good they can even help create pictures (DALL-E), make music, and write computer code.

Think of transformers as the difference between someone trying to understand a film by watching it through a keyhole (one bit at a time) versus someone who can see the whole screen at once and instantly spot how everything connects together!